Introduction

Kubernetes has become the de facto platform for running and managing containerized applications and services. At the same time, cloud providers offer a rich catalog of ready-to-use managed services.

Kubernetes services and managed services are often used in conjunction to build a product or service.

A key security pillar is making sure communication is secure, both between services and on exposed endpoints. One of the first steps toward this is using TLS certificates.

The problem we will solve in this post is how to seamlessly distribute certificates from a Kubernetes cluster to managed services, focusing on AWS.

Technical setup

The case we will be focusing on is the following:

- A Kubernetes cluster deployed on AWS (EKS)

- Certificates generated by cert-manager, running on the Kubernetes cluster

- Optionally, a demo service (podinfo) exposed via an ingress controller and using a wildcard TLS certificate

What we need to achieve:

- Wildcard certificates are generated on the Kubernetes cluster by cert-manager. They are used to secure our Kubernetes Ingresses.

- We need the same wildcard certificates to be used with an AWS OpenSearch distribution.

- We need to import these wildcard certificates into AWS Certificate Manager (ACM) for seamless integration with OpenSearch.

The same process can be applied to any service that integrates with ACM. You can find the list of integrated services here: https://docs.aws.amazon.com/acm/latest/userguide/acm-services.html.

The main idea here is that once your certificates are in ACM, you’re ready to use them with other AWS services.

What is missing

cert-manager takes care of generating certificates in the Kubernetes cluster, and once certificates are available in ACM, we can use them with other AWS services.

The missing piece is how to get our certificates from Kubernetes into ACM.

Enter kube-cert-acm

kube-cert-acm is a Kubernetes tool that fills exactly this gap. The concept is fairly simple:

- You configure the list of certificates you want to import into ACM from your Kubernetes cluster.

- kube-cert-acm imports these certificates into ACM and keeps watching them. If a certificate is renewed or updated, the change is reflected in ACM.

I ran into this problem on a project where I was using cert-manager to generate wildcard certificates for Kubernetes services. I also had an OpenSearch distribution with Kibana that needed the same wildcard certificate, which was stored as a Secret in the Kubernetes cluster.

In the following sections, we will go through setting up and configuring this tool on an EKS cluster.

kube-cert-acm: Configuration and install

Requirements

In addition to our EKS cluster, we will need:

- IAM Roles for Service Accounts (IRSA) configured for your EKS cluster

  This allows us to attach an IAM role to the kube-cert-acm Kubernetes service account, granting it only the privileges it requires. This is the recommended way to provide fine-grained AWS access to a Kubernetes workload. IRSA is beyond the scope of this blog post. For details, refer to the AWS documentation on IAM Roles for Service Accounts.

- cert-manager installed and configured

  cert-manager running on the cluster and configured to generate certificates. For our tests, we will configure a Let’s Encrypt ClusterIssuer that uses the Let’s Encrypt staging server.

- Wildcard TLS certificates generated by cert-manager

  Wildcard TLS certificates generated using the configured Let’s Encrypt issuer. For demonstration purposes, we will generate two wildcard certificates for two subdomains, in two different namespaces:

  - *.dev.cloudiaries.com in the dev namespace
  - *.demo.cloudiaries.com in the demo namespace

- Optionally, a demo service with an Ingress resource for which we’ll generate a TLS certificate

  We will install podinfo as a demo service, configure an Ingress for it, and use the generated certificate. This is not mandatory, but it makes it easy to play with the certificates and the Ingress configuration.
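Since everything in this post is managed with Terraform, the Let’s Encrypt ClusterIssuer and the two wildcard Certificates can also be declared with the kubernetes provider’s kubernetes_manifest resource. The following is a minimal sketch under several assumptions: the cloudiaries.com zone is hosted in Route 53 and cert-manager already has Route 53 permissions (for example through its own IRSA role), because Let’s Encrypt only issues wildcard certificates through the DNS-01 challenge; the issuer name letsencrypt-staging and the contact email are hypothetical; the dev and demo namespaces already exist; and the cert-manager CRDs are installed before this is planned, since kubernetes_manifest needs them at plan time.

```hcl
# Let's Encrypt staging ClusterIssuer using the DNS-01 challenge against Route 53
resource "kubernetes_manifest" "letsencrypt_staging" {
  manifest = {
    apiVersion = "cert-manager.io/v1"
    kind       = "ClusterIssuer"
    metadata = {
      name = "letsencrypt-staging" # hypothetical issuer name
    }
    spec = {
      acme = {
        server = "https://acme-staging-v02.api.letsencrypt.org/directory"
        email  = "admin@example.com" # hypothetical contact address
        privateKeySecretRef = {
          name = "letsencrypt-staging-account-key"
        }
        solvers = [{
          dns01 = {
            route53 = {
              region = var.region
            }
          }
        }]
      }
    }
  }
}

# One wildcard Certificate per namespace; the Secret gets the same name as the Certificate
locals {
  wildcard_domains = {
    dev  = "dev.cloudiaries.com"
    demo = "demo.cloudiaries.com"
  }
}

resource "kubernetes_manifest" "wildcard_certificate" {
  for_each = local.wildcard_domains

  manifest = {
    apiVersion = "cert-manager.io/v1"
    kind       = "Certificate"
    metadata = {
      name      = each.value
      namespace = each.key
    }
    spec = {
      secretName = each.value
      dnsNames   = ["*.${each.value}"]
      issuerRef = {
        name = "letsencrypt-staging"
        kind = "ClusterIssuer"
      }
    }
  }

  depends_on = [kubernetes_manifest.letsencrypt_staging]
}
```

Naming each Secret after its Certificate keeps the names consistent with the kube-cert-acm configuration used later in this post.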

Now that everything is properly configured, and certificates are generated and stored in our cluster, let’s install and configure kube-cert-acm. We will install it using its Helm chart.

The GitHub repository for kube-cert-acm is https://github.com/mstiri/kube-cert-acm

The repository contains:

- The source code for the project

- The Dockerfile to build the container image

- The Helm chart

A container image is built for each version and published to Docker Hub: https://hub.docker.com/r/mstiri/kube-cert-acm/tags

A Helm Chart package is built for each version and published to the public Helm repository: https://mstiri.github.io/kube-cert-acm

We will take an IaC approach to installing and configuring our services: everything is done with Terraform.
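For Terraform to manage Helm releases and Kubernetes objects, the kubernetes and helm providers must be able to reach the EKS cluster. A minimal sketch of that wiring, assuming var.eks.cluster_id holds the cluster name (as in the resource names used below) and the Helm provider 2.x syntax, where the cluster connection lives in a nested kubernetes block and values are passed with set blocks:

```hcl
# Look up the EKS API endpoint and CA, plus a short-lived authentication token
data "aws_eks_cluster" "this" {
  name = var.eks.cluster_id
}

data "aws_eks_cluster_auth" "this" {
  name = var.eks.cluster_id
}

provider "kubernetes" {
  host                   = data.aws_eks_cluster.this.endpoint
  cluster_ca_certificate = base64decode(data.aws_eks_cluster.this.certificate_authority[0].data)
  token                  = data.aws_eks_cluster_auth.this.token
}

# Helm provider 2.x: the cluster connection is configured in a nested kubernetes block
provider "helm" {
  kubernetes {
    host                   = data.aws_eks_cluster.this.endpoint
    cluster_ca_certificate = base64decode(data.aws_eks_cluster.this.certificate_authority[0].data)
    token                  = data.aws_eks_cluster_auth.this.token
  }
}
```

The token from aws_eks_cluster_auth is short-lived, which is fine for a regular plan and apply but can expire during very long runs.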

Configuration

Let’s start by configuring the IAM role used by our Kubernetes service account. First, the policy document that defines the actions allowed for our deployment:

```hcl
data "aws_iam_policy_document" "kube_cert_acm" {
  count = var.kube-cert-acm.enabled ? 1 : 0

  statement {
    actions   = ["sts:AssumeRole"]
    resources = ["*"]
  }

  # acm:ListCertificates does not support resource-level permissions
  statement {
    actions = [
      "acm:ListCertificates"
    ]
    resources = ["*"]
  }

  # Per-certificate actions, scoped to certificates in this account and region
  statement {
    actions = [
      "acm:ExportCertificate",
      "acm:DescribeCertificate",
      "acm:GetCertificate",
      "acm:UpdateCertificateOptions",
      "acm:AddTagsToCertificate",
      "acm:ImportCertificate",
      "acm:ListTagsForCertificate"
    ]
    resources = ["arn:aws:acm:${var.region}:${var.account_id}:certificate/*"]
  }
}
```

This document will be used to create our IAM policy:

```hcl
resource "aws_iam_policy" "kube_cert_acm" {
  count = var.kube-cert-acm.enabled ? 1 : 0

  name   = "kube-cert-acm-policy-${var.eks.cluster_id}"
  policy = data.aws_iam_policy_document.kube_cert_acm[count.index].json
}
```

Finally, we create an IAM role. Its trust relationship must allow it to be assumed only by the kube-cert-acm Kubernetes service account, in the namespace where the tool will be installed.

We will use the iam-assumable-role-with-oidc module to create this role.

```hcl
module "iam_assumable_role_for_kube_cert_acm" {
  count = var.kube-cert-acm.enabled ? 1 : 0

  source  = "terraform-aws-modules/iam/aws//modules/iam-assumable-role-with-oidc"
  version = "4.11.0"

  create_role                = true
  number_of_role_policy_arns = 1
  role_name                  = "kube-cert-acm-role-${var.eks.cluster_id}"
  provider_url               = replace(var.eks.cluster_oidc_issuer_url, "https://", "")
  role_policy_arns           = [aws_iam_policy.kube_cert_acm[count.index].arn]

  oidc_fully_qualified_subjects = ["system:serviceaccount:${var.kube-cert-acm.namespace}:${var.kube-cert-acm.service_account}"]
}
```
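To make the “assumable only by the kube-cert-acm service account” part concrete, the trust relationship the module attaches to the role is roughly equivalent to the policy document below. This is only an illustration and is not needed alongside the module; the data source name kube_cert_acm_trust is hypothetical, and it assumes the cluster’s IAM OIDC provider is registered in var.account_id.

```hcl
locals {
  # OIDC issuer host/path without the scheme, e.g. oidc.eks.<region>.amazonaws.com/id/<id>
  oidc_issuer = replace(var.eks.cluster_oidc_issuer_url, "https://", "")
}

# Illustrative trust policy: only tokens issued to the kube-cert-acm
# service account can be exchanged for this role's credentials
data "aws_iam_policy_document" "kube_cert_acm_trust" {
  statement {
    effect  = "Allow"
    actions = ["sts:AssumeRoleWithWebIdentity"]

    principals {
      type        = "Federated"
      identifiers = ["arn:aws:iam::${var.account_id}:oidc-provider/${local.oidc_issuer}"]
    }

    condition {
      test     = "StringEquals"
      variable = "${local.oidc_issuer}:sub"
      values   = ["system:serviceaccount:${var.kube-cert-acm.namespace}:${var.kube-cert-acm.service_account}"]
    }
  }
}
```

The sub condition is what pins the role to a single namespace and service account: a pod running under any other service account receives a token with a different subject and cannot assume the role.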

At this point, the AWS side is ready. Let’s take care of installing the Helm chart.

Install

We need to specify the list of certificates we want to import into ACM in a kube-cert-acm-values.yml file. In our case, it is:

```yaml
certificatesConfig:
  certificates_config.yaml: |
    - cert: dev.cloudiaries.com
      namespace: dev
      domain_name: "*.dev.cloudiaries.com"
    - cert: demo.cloudiaries.com
      namespace: demo
      domain_name: "*.demo.cloudiaries.com"
```

We will use the Terraform Helm provider to install the chart:

```hcl
resource "helm_release" "kube_cert_acm" {
  count = var.kube-cert-acm.enabled ? 1 : 0

  name                  = "kube-cert-acm"
  repository            = var.kube-cert-acm.helm_repository
  chart                 = "kube-cert-acm"
  version               = var.kube-cert-acm.chart_version
  render_subchart_notes = false
  namespace             = var.kube-cert-acm.namespace
  create_namespace      = true

  values = [file("${path.module}/kube-cert-acm-values.yml")]

  set {
    name  = "serviceAccount.annotations.eks\\.amazonaws\\.com/role-arn"
    value = module.iam_assumable_role_for_kube_cert_acm[count.index].iam_role_arn
  }

  set {
    name  = "aws.region"
    value = var.region
  }
}
```

The value of our Terraform kube-cert-acm variable is:

```hcl
variable "kube-cert-acm" {
  default = {
    enabled         = true
    chart_version   = "0.0.3"
    namespace       = "system"
    service_account = "kube-cert-acm"
    helm_repository = "https://mstiri.github.io/kube-cert-acm"
  }
}
```

You can check the default values of the chart in the kube-cert-acm GitHub repository.

At this stage:

- We have certificates in the Kubernetes cluster.

- We have installed and configured kube-cert-acm to sync our certificates to ACM.

Let’s check that everything is working as expected.

The two certificates we created using cert-manager:

```shell
$ kubectl -n dev get certificates
NAME                  READY   SECRET                AGE
dev.cloudiaries.com   False   dev.cloudiaries.com   39s

$ kubectl -n demo get certificates
NAME                   READY   SECRET                 AGE
demo.cloudiaries.com   True    demo.cloudiaries.com   94s
```

A READY status of True means the certificate has been issued successfully; if a certificate still shows False, as the dev certificate does in the output above, give cert-manager a moment to complete issuance. Let’s check that the secrets have been created for these certificates:

```shell
$ kubectl -n dev get secret dev.cloudiaries.com
NAME                  TYPE                DATA   AGE
dev.cloudiaries.com   kubernetes.io/tls   2      47s

$ kubectl -n demo get secret demo.cloudiaries.com
NAME                   TYPE                DATA   AGE
demo.cloudiaries.com   kubernetes.io/tls   2      7s
```

Each of these secrets has two keys:

- tls.crt: the certificate itself (the whole certificate chain)

- tls.key: the private key
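To see what kube-cert-acm automates, here is a sketch of importing the dev certificate once with plain Terraform. ACM expects the leaf certificate and the intermediate chain as separate fields, so the bundle stored in tls.crt has to be split. This assumes kubernetes provider 2.x (whose kubernetes_secret data source returns decoded values) and that the Secret already exists when Terraform plans; the resource and local names are hypothetical. Because Terraform only reads the Secret when it runs, a renewal by cert-manager would not reach ACM until the next terraform apply, which is exactly the gap kube-cert-acm fills.

```hcl
# Read the TLS Secret created by cert-manager
data "kubernetes_secret" "dev_wildcard" {
  metadata {
    name      = "dev.cloudiaries.com"
    namespace = "dev"
  }
}

locals {
  # tls.crt holds the leaf certificate followed by the intermediates.
  # Base64 never contains "-", so [^-]+ spans one PEM body including its newlines.
  dev_pem_blocks = regexall(
    "-----BEGIN CERTIFICATE-----[^-]+-----END CERTIFICATE-----",
    data.kubernetes_secret.dev_wildcard.data["tls.crt"]
  )
}

# One-off import: the first PEM block is the leaf, the rest form the chain
resource "aws_acm_certificate" "dev_wildcard" {
  private_key       = data.kubernetes_secret.dev_wildcard.data["tls.key"]
  certificate_body  = local.dev_pem_blocks[0]
  certificate_chain = join("\n", slice(local.dev_pem_blocks, 1, length(local.dev_pem_blocks)))
}
```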

Let’s now see if kube-cert-acm was able to import these certificates to ACM.

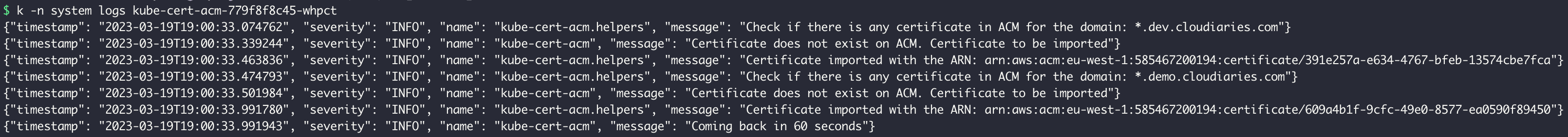

As per the pod logs:

Let’s check from the AWS side:

We can see that our certificates have been imported to ACM.
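Once a certificate is in ACM, an integrated service only needs its ARN. As a sketch of the OpenSearch use case that motivated this post, the imported wildcard certificate could be looked up with the aws_acm_certificate data source and attached to a custom endpoint on an aws_opensearch_domain. This is a fragment rather than a complete domain: the domain name and custom hostname are hypothetical, the remaining domain settings are omitted, and it assumes an AWS provider version that includes aws_opensearch_domain. Also keep in mind that certificates from the Let’s Encrypt staging server are not trusted by browsers, so a real setup would use the production issuer.

```hcl
# Find the wildcard certificate imported by kube-cert-acm
data "aws_acm_certificate" "dev_wildcard" {
  domain      = "*.dev.cloudiaries.com"
  types       = ["IMPORTED"]
  most_recent = true
}

resource "aws_opensearch_domain" "search" {
  domain_name = "search-dev" # hypothetical domain name

  # ... cluster_config, ebs_options and access policies omitted ...

  domain_endpoint_options {
    enforce_https                   = true
    tls_security_policy             = "Policy-Min-TLS-1-2-2019-07"
    custom_endpoint_enabled         = true
    custom_endpoint                 = "search.dev.cloudiaries.com" # hypothetical host covered by the wildcard
    custom_endpoint_certificate_arn = data.aws_acm_certificate.dev_wildcard.arn
  }
}
```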

kube-cert-acm keeps checking the certificates it is configured to watch. If a certificate changes, it is updated accordingly in ACM.

Deleting a certificate from the cluster does not remove it from ACM. Conversely, a certificate deleted from ACM is imported again, as long as it still exists in the cluster and kube-cert-acm is configured to synchronize it.

These checks run periodically. The default check interval is 1 minute, and it can be changed by setting checkIntervalSeconds to the desired value.
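With the helm_release resource from the install step, the interval could be overridden with one more set block. This is a fragment to merge into that existing resource, not a second release; it assumes checkIntervalSeconds is a top-level value of the chart (check the chart’s default values to confirm), and 300 seconds is only an example.

```hcl
resource "helm_release" "kube_cert_acm" {
  # ... existing arguments and set blocks from the install step ...

  # Assumption: checkIntervalSeconds is a top-level chart value
  set {
    name  = "checkIntervalSeconds"
    value = "300" # check every 5 minutes instead of the default 60 seconds
  }
}
```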

Conclusion

The purpose of kube-cert-acm is to sync certificates from the cluster to ACM. It was developed to fit a specific need I had, but the project can be extended to support other configurations.

If you find the project useful, do not hesitate to star it on GitHub. If you would like to contribute, feel free to open a pull request.